Directory

Helpful Links

To Address Next Meeting

- ...

Meeting Notes

2021-06-10

Agenda:

2021-06-03

Attendees: Burke, Allan, Bashir, Mike S, Suruchi, Tendo, Grace P, Joseph, Jen, Daniel

Updates:

- Suruchi worked on fixing inconsistent parameter naming between the streaming and batch pipelines

- Allan focused on getting 5 sample PEPFAR indicators working

- Allan & Bashir to try running at Ampath over the weekend; the plan for next week is to get the data warehouse batch ready with sample data from the last 3 months and test it against the indicator library

Bashir proposal: Skip Thursday calls if there is nothing to discuss

- Check for agenda items on Slack on Wednesdays. If no agenda items or only updates, then Thursday call can be canceled. (Bashir to own)

- You can enter agenda items here: https://om.rs/aesnotes

Resources for demo data

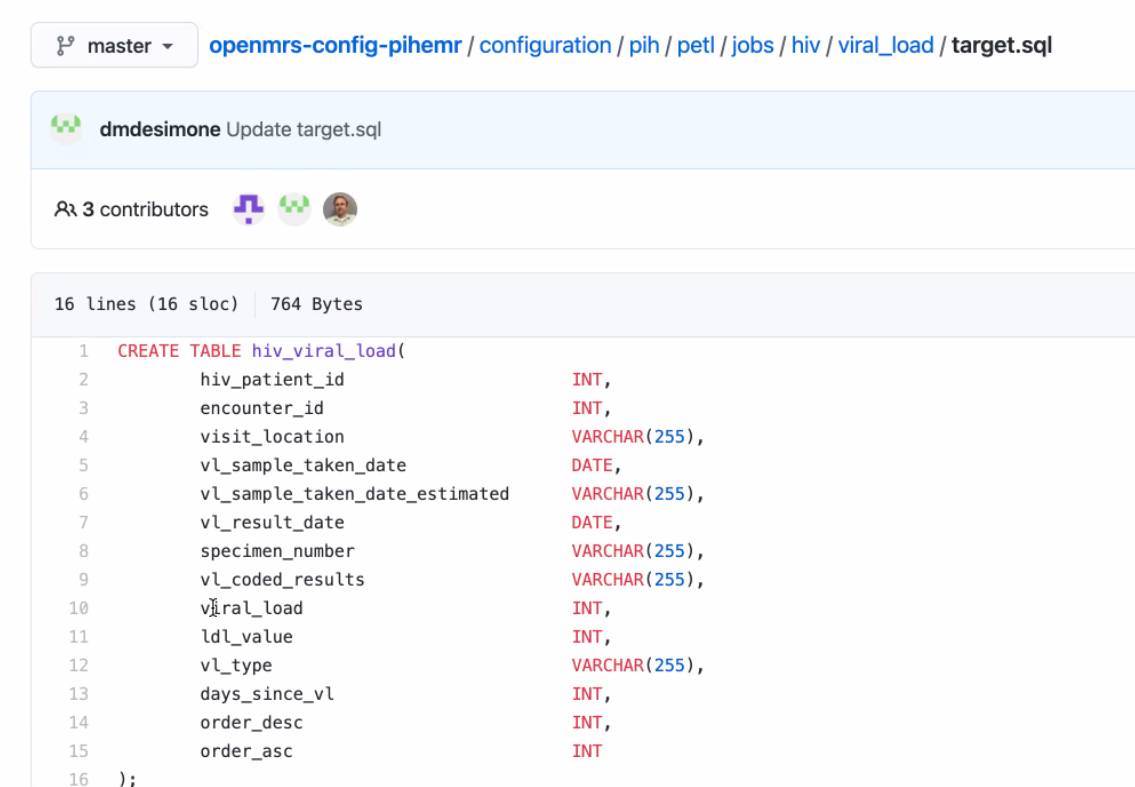

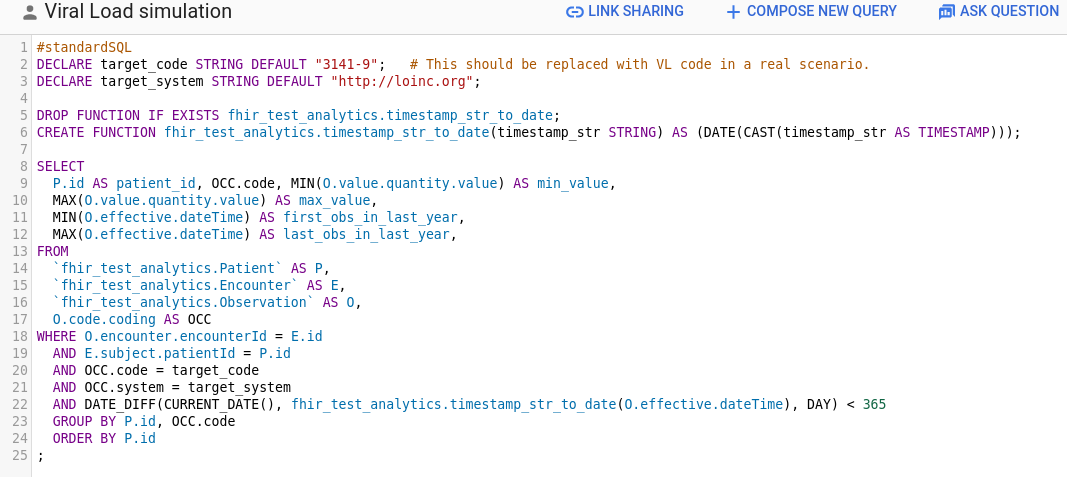

- PIH viral load report: https://github.com/PIH/openmrs-config-pihemr/blob/master/configuration/pih/petl/jobs/hiv/viral_load/

- Indicators: Analytics Engine (including ETL and reporting improvements): https://github.com/GoogleCloudPlatform/openmrs-fhir-analytics/projects/1

2021-05-27: Memory Issue & Streaming Updates; Q&A

Attendees: Suruchi, Bashir, Tendo, Mike, Daniel, Grace P, Burke, Amos

Regrets: Allan

Updates:

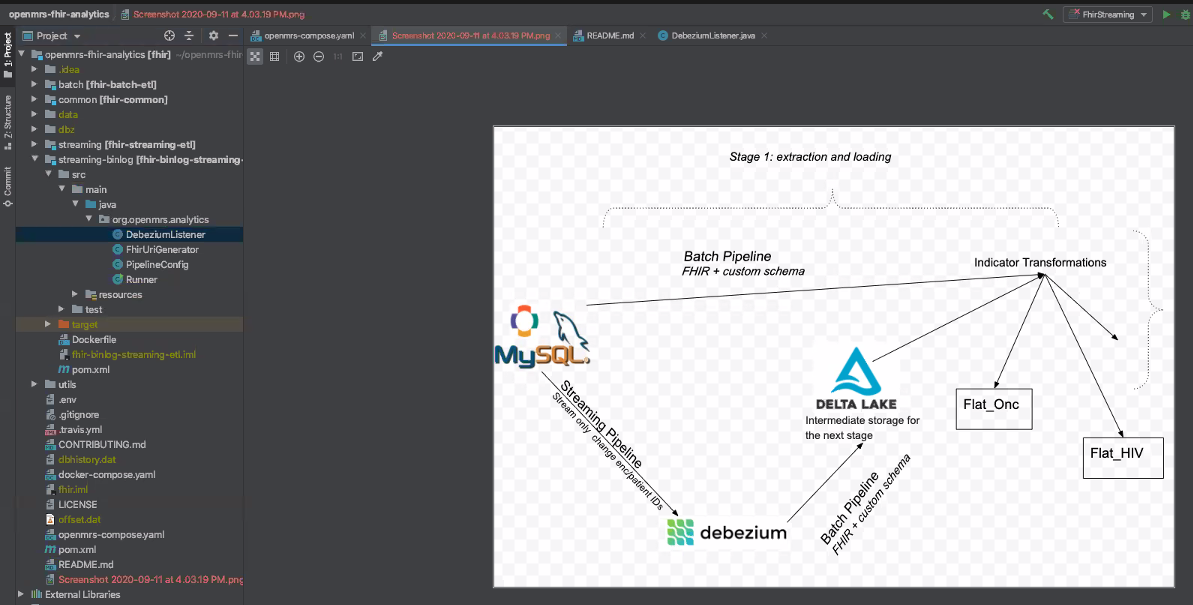

Bashir: (1) The memory issue is being addressed by writing Parquet files soon after resources are fetched → needs to be tested at AMPATH over a weekend. (2) Consolidating the streaming pipeline: any new resources coming from the EMR are handled by the streaming branch, while historical resources are fetched in parallel.
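The memory fix above can be illustrated with a minimal sketch of a fetch → convert → write loop. Each batch is written out as soon as it is fetched, so memory stays bounded by one batch rather than by the size of the database. The `fetch`, `convert` and `write_file` callables are hypothetical stand-ins for the OpenMRS request, the Avro conversion and the Parquet writer. This is an illustration, not the engine's actual code.

```python
from typing import Callable, Iterable, Iterator, List


def chunks(ids: Iterable[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive lists of at most `size` ids without materializing the whole input."""
    chunk: List[str] = []
    for resource_id in ids:
        chunk.append(resource_id)
        if len(chunk) == size:
            yield chunk
            chunk = []
    if chunk:
        yield chunk


def stream_to_files(ids: Iterable[str],
                    fetch: Callable[[List[str]], List[dict]],
                    convert: Callable[[dict], dict],
                    write_file: Callable[[List[dict]], None],
                    batch_size: int = 100) -> int:
    """Fetch, convert and write one batch at a time; returns the number of records written.

    Hypothetical stand-ins: `fetch` ~ one request to OpenMRS for a batch of ids,
    `convert` ~ FHIR-to-Avro conversion, `write_file` ~ writing one Parquet file.
    Nothing is grouped or shuffled across batches, so memory stays roughly one batch.
    """
    written = 0
    for id_batch in chunks(ids, batch_size):
        records = [convert(resource) for resource in fetch(id_batch)]
        write_file(records)  # after this call the batch no longer needs to stay in memory
        written += len(records)
    return written


# Usage with in-memory fakes (the fake writer keeps every batch only so we can inspect it).
files: List[List[dict]] = []
total = stream_to_files(
    (f"enc{i}" for i in range(250)),
    fetch=lambda batch: [{"id": x} for x in batch],
    convert=lambda resource: {"uuid": resource["id"]},
    write_file=files.append,
)
```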

Questions:

- Will the warehouse contain flat files that people with MySQL experience could query?

- Data are pulled into the warehouse as FHIR resources into a SQL on FHIR schema

- The SQL on FHIR data are then written into Parquet files

- Are updates to the warehouse incremental, so that when a new record comes in, the warehouse is updated automatically?

- The analytics engine is being designed to support both batch mode (to bootstrap or to re-generate the data warehouse) and incremental updates (the typical use case of streaming changes to the warehouse in near real time)

- There is a notion of "pages" where resources are loaded in small batches before being flushed. The paging can be configured based on time (e.g., flushing each minute, each hour, each day). Larger pages can improve performance at the cost of more memory and a longer delay in data getting into the warehouse.

- Can the solution work offline, i.e., can it run at a facility without an internet connection so that evaluation staff can still pull data from the warehouse and process it further?

- Yes. The analytics engine is designed to run against an instance of OpenMRS and doesn't require internet access to transform the data.
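The time-based paging described in the answer on incremental updates can be sketched as a small buffer. It collects resources and flushes a page once that page's time window has elapsed. `TimedPageBuffer` and its `flush_fn` callback are hypothetical names. This simplified version only checks the window when a new resource arrives; a real pipeline would presumably also need a timer for quiet periods.

```python
import time
from typing import Callable, List, Optional


class TimedPageBuffer:
    """Collects resources and flushes them as one page once `window_seconds` have elapsed."""

    def __init__(self, window_seconds: float, flush_fn: Callable[[List[dict]], None],
                 clock: Callable[[], float] = time.monotonic):
        self.window_seconds = window_seconds
        self.flush_fn = flush_fn  # hypothetical: e.g., writes one page to the warehouse
        self.clock = clock        # injectable so the behaviour can be tested deterministically
        self.page: List[dict] = []
        self.page_started: Optional[float] = None

    def add(self, resource: dict) -> None:
        now = self.clock()
        if self.page_started is None:
            self.page_started = now
        self.page.append(resource)
        # A larger window means bigger pages (fewer writes) but more memory and more delay.
        if now - self.page_started >= self.window_seconds:
            self.flush()

    def flush(self) -> None:
        if self.page:
            self.flush_fn(self.page)
        self.page = []
        self.page_started = None


# Usage with a fake clock and a one-minute window.
pages: List[List[dict]] = []
fake_now = [0.0]
buffer = TimedPageBuffer(60, pages.append, clock=lambda: fake_now[0])
for t in (0, 10, 59, 60, 61):
    fake_now[0] = t
    buffer.add({"t": t})
buffer.flush()  # flush whatever is left at shutdown
```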

Review of PIH Examples

- https://github.com/PIH/openmrs-config-pihemr/tree/master/configuration/pih/petl/jobs

- https://github.com/PIH/openmrs-config-pihemr/blob/master/configuration/pih/petl/jobs/hiv/viral_load/target.sql

2021-05-20:

Attendees: Amos Laboso, Burke, Bashir, Ian, Jennifer, Grace, Mike, Suruchi, Moses

Regrets: Allan

Summary:

Updates:

Bashir: FHIR resources are being kept in memory for too long because of the local runner; the bug is caused by OMRS modelling. Thinking we shouldn't pursue it, since the cluster use case doesn't seem prevalent. Deciding whether to run only locally or to provide an option for either distributed or local execution.

The FHIR → Parquet files approach is consuming a lot of memory and slowing us down because we're running into errors and memory issues. Needing a cluster just to pick data from OMRS and put it into Parquet files is not a workaround. Trying to stay cloud-ready, as long as we can function well on a single server.

Smaller sites & offline

2021-05-13:

Recording: https://iu.mediaspace.kaltura.com/media/t/1_6m0e3ubn

- Proposal: Move the call time 1 hr later? (switch slots with the MFE squad)

- Bashir would have a conflict on some weeks

- Updates

- Ampath: Active & historical datasets. Not able to run the historical dataset because of out-of-memory issues. Solution proposal: ___

- Bug: FHIR resources are loaded many times; haven't been able to reproduce

- Time-based disaggregation of indicators

- Could we demo using the engine to create a flattened file?

- General approach is (1) OMRS → FHIR warehouse; (2) create a library on top of the FHIR data warehouse so people can query it easily. Part of this would include producing the flat tables.

- We would create views on the FHIR data warehouse, defined in the library (dependent on the performance of those views).

- Goals

- Able to run pipelines at AMPATH scale.

- Show the libraries are performant at AMPATH scale. → End Goal this Quarter: System that can supplement current AMPATH ETL/reporting pipeline (start supporting production-level reporting needs for AMPATH)

- How can we get other implementations involved/interested in Analytics Engine development?

- Knowing use cases / pain points would be very helpful for analytics engine work at this point

- PIH has a working solution, but would like a solution that...

- Provides consistency across different implementations (all have different reporting solutions)

- Avoids having to rebuild warehouse tables from scratch

- The analytics engine can work in batch or incremental mode. Batch mode is probably preferable whenever it performs well enough for business needs, since streaming/incremental mode introduces complexity.

- The analytics engine is using FHIR to standardize clinical data. Not all data within OpenMRS will fit into the FHIR model. Currently, the Analytics Engine team is prioritizing data that fits the FHIR schema.

- Plan to discuss technical approach choices on next Tuesday's call

2021-05-06:

- Updates

- Ampath: Deployed! Data extraction on Saturday; now focusing on generating PEPFAR indicators. After that, we should be able to achieve one of the main goals for this quarter.

- Organizing resources in the data warehouse

- Incremental generation of parquet files for resources + debugging

- Reporting Module Project Proposal

- Will reach out on Talk or in Slack

- ETL / Flattening update from PIH/Mike - "if we were to create a flat table, how would that look"

- Mike Seaton demonstrated how PIH is using combinations of YML and SQL files to ETL data from MySQL into a data warehouse (SQL Server)

- https://github.com/PIH/openmrs-config-pihemr

- Primary focus is to get data into flattened format so data analysts can understand and use it

2021-04-29:

We want to hear from other people and open up new perspectives. Without a dedicated Product Manager, we need representatives from other teams to bring their problems and their efforts to address them. Otherwise, without a PM, dev leads can't take on the burden of going around and understanding others' problems.

- PIH

- Using YAML and SQL to define exports that pull data from OpenMRS to a flat table that PowerBI can use

- OHRI - UCSF

- COVID

Ampath run updates

Dev updates

...

AES has (to date) been the combination of three organizations/projects working together on an analytics engine to meet their specific needs:

- AMPATH reporting needs

- PLIR needs

- Haiti SHR needs

MVP

- System that can replace AMPATH's ETL and reporting framework

TODO: Mike to create an example of "if we were to create a flat table, how would that look"

2021-04-15: Squad Call

Recording: https://iu.mediaspace.kaltura.com/media/t/1_80xjwir1

Attendees: Debbie, Bashir, Daniel, David, Burke, Ian, Jen, Allan, Natalie, Tendo, Aparna, Mike S, Naman, Mozzy

Welcome from PIH: Natalie, Debbie, David, Mike

- The M&E team gets a spreadsheet emailed from Jet.

- Issues with de-duplicating patients.

- The data warehouse created for Malawi is being redone.

- Recent projects have taken a different approach: M&E teams analyze the KPI requirements and design the SQL tables they'd want, then write an ETL that produces exactly what they'd want for PEPFAR reporting and MCH reporting.

- Past approach was too complicated; didn't promote data use.

Details of past situation

- Previously you'd go into OMRS and export and download an Excel spreadsheet. Exports became increasingly wrong (wrong concepts, or new concepts added that weren't reflected in the exports), and people lost trust. How things were calculated was very opaque.

- 7 health facilities feed into one DB and another 7 into another DB; the 2 DBs are then merged for reporting (done on the production server of one of those sets because there's no third server, but a new one is being set up for reporting).

- They had hoped to set up on Jet, but it seems proprietary, expensive, and old; they started hosting clinical data in it 2 years ago, pulling raw (or flattened?) tables, such as patient and enrollment tables, into the Jet DW.

- Then COVID started, and they also weren't able to travel to sites to do training on Jet.

- Switched back to Pentaho & MySQL (used before; open source). Hired 2 new people on the Malawi team who were comfortable with that tool and could build their own warehouse in it.

- Main need: 1 table per form. However concepts are organized into a form, that organization needs to make sense as a table unit: each form maps to 1 table.

- Would like to add things like enrollment, statuses.

- Why? Forms are an intuitive unit, and indicator calculations are usually like "obs at last visit".

- About 80% of the time, cross-visit information isn't required

- KEMR is similar: created a table to automate 1 table per form

- Previous tables were extremely wide because they included every question we'd ever asked. Would split them into multiple tables.

- PowerBI dashboards will be connected to these 1-form tables.

- Volume: Malawi: 50,000 patients, a few million obs. Haiti: 500,000-1M patients, tens of millions of encounters, and 4-5 times that for obs.

- Time: 5-10 minutes to run, but on some other servers it takes longer and becomes a barrier (e.g., over an hour and increasing by the day). That's why they're moving away from flattening the full DB toward a more targeted approach based on the data you're looking for.

- Ultimate Goal: Anything that makes it easier, esp. for local M&E people, to get their hands on the data and make the reports, is great. Easier access, and automation that reduces the workload of local M&E.
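The "1 table per form" shape PIH described can be sketched as a pivot. Long-format obs rows become one wide table per form, with one row per encounter and one column per concept. The input field names (`form`, `encounter_id`, `patient_id`, `concept`, `value`) and the form and concept names in the usage are assumptions for illustration only.

```python
from collections import defaultdict
from typing import Dict, List


def obs_to_form_tables(obs_rows: List[dict]) -> Dict[str, List[dict]]:
    """Pivot long-format obs rows into {form: [one row per encounter, one column per concept]}."""
    tables: Dict[str, Dict[object, dict]] = defaultdict(dict)
    for ob in obs_rows:
        row = tables[ob["form"]].setdefault(
            ob["encounter_id"],
            {"encounter_id": ob["encounter_id"], "patient_id": ob["patient_id"]},
        )
        # Only concepts captured on this form become columns, so each table stays narrow.
        # If a concept repeats within one encounter, the last value wins in this sketch.
        row[ob["concept"]] = ob["value"]
    return {form: list(rows.values()) for form, rows in tables.items()}


obs = [
    {"form": "HIV Intake", "encounter_id": 1, "patient_id": "p1", "concept": "weight_kg", "value": 61},
    {"form": "HIV Intake", "encounter_id": 1, "patient_id": "p1", "concept": "who_stage", "value": 2},
    {"form": "HIV Followup", "encounter_id": 2, "patient_id": "p1", "concept": "viral_load", "value": 40},
]
tables = obs_to_form_tables(obs)
```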

Overview:

- Targeting Ampath because it supports tens of clinics in a central DB and has a complicated ETL system that doesn't scale well. That system is so complex that few people understand it, and where the data came from is too opaque to instill a sense of trust; we want to replace it. The M&E team needs flattened data: we want to flatten data into analytical tables that can be converted into Excel-like tables they can run their own reports on.

- Next, we want to try supporting an implementation that is distributed, and then centralize its data.

- Design goals:

- Easy to use on a single machine/laptop; the whole system can run on a single machine.

- ETL part: 2 modes of operations, batch mode and streaming mode.

- Reporting part:

Questions

- If you have any other questions about what might be useful for an M&E/Analytics person, please email me at nprice@pih.org

- What are the best ways forward for PIH to try this and apply it to some of their more challenging use cases? E.g., local M&E teams not showing analytics of things happening over time

- Follow up to figure out how to create the FHIR resources...

2021-04-01: Squad Call

Attendees: Bashir, Moses, Tendo, Burke, Daniel, Ian

Regrets: Allan, JJ, Grace P, Cliff

- Bashir: working on date-based filtering, as a way to get data only for "active" patients within a date range (e.g., all data for "active" patients within the past year)

- Given a list of resources and a date range, we pull all dated resources within the date range

- For all pulled resources that refer to a patient, we pull all data for each patient

- Moses: working on a blocker, inability to pull person and patient resources for the same individual in the same run (#144)

- Tendo: working on implementing the Media resource, working through the complex handler

- Cliff (in absentia) working on running system via docker command

- Skipping meeting next week due to the OpenMRS 2021 Spring Showcase

2021-03-25: Squad Call

Attendees: Bashir, Allan, Cliff, Daniel, Ian, JJ, Ivan N, Willa, Grace P

Recording: https://iu.mediaspace.kaltura.com/media/t/1_msvydoso

Summary:

1. Decision: New design for date-based fetching in the batch pipeline, based on an active period (for each patient "active" in care, all resources will be extracted). We define an "active" period (the last year) and break the fetch into 2 parts: (1) any obs from the last year, then (2) for each patient with a history in the active period, all of their history is downloaded. Why: this avoids search query time-out problems.

2. Later: Add the ability to retrospectively extract resources back in time (re-run the pipeline for any time period) in order to update your data warehouse.
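Here is a minimal in-memory sketch of the two-step, date-based fetch in the decision above. Step 1 selects resources dated inside the active period to identify active patients. Step 2 pulls every resource for those patients regardless of date. In the real pipeline both steps would be server-side queries. The dict shape used here (`id`, `date`, `patient`) is a hypothetical simplification.

```python
from datetime import date
from typing import List, Set


def fetch_active_patient_data(resources: List[dict], start: date, end: date) -> List[dict]:
    """Two-step, date-based extraction over an in-memory list (stand-in for server queries).

    Each resource is a dict with 'id' and, optionally, 'date' and 'patient' (a patient id).
    """
    # Step 1: dated resources inside the active period identify the "active" patients.
    active: Set[str] = {
        r["patient"] for r in resources
        if r.get("patient") and r.get("date") is not None and start <= r["date"] <= end
    }
    # Step 2: pull *all* resources for those patients, including ones before the period.
    return [r for r in resources if r.get("patient") in active]


resources = [
    {"id": "o1", "patient": "p1", "date": date(2020, 1, 5)},
    {"id": "o2", "patient": "p1", "date": date(2015, 3, 1)},   # old history of an active patient
    {"id": "o3", "patient": "p2", "date": date(2016, 7, 9)},   # p2 is not active in 2020
    {"id": "e1", "patient": "p3", "date": date(2020, 6, 1)},
]
extracted = fetch_active_patient_data(resources, date(2020, 1, 1), date(2020, 12, 31))
```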

Updates:

- Bashir: Close to a fix for the memory issue; working on date-based fetching

- Allan: Trying to finalize orchestration of indicator calculation. Implementing using docker & docker compose. Streaming mode.

- Antony (regrets): Working on list of indicators that need baseline data.

- Cliff: Streaming mode script. Refactoring in batch mode.

- Mike: Experimenting with approach, more updates next week.

Current plan for implementing date-based fetching: Getting the data when you need it, not all at once.

- Implemented on the FHIR search-based path. The batch pipeline has 2 modes: JDBC mode and FHIR search-based mode.

- The first step allows specifying "I need the last year"

- List of patients who've had >= 1 obs in the last year

- In the second download, we download all of their resources going back in time.

- This will avoid the search query time-out problem we ran into in past. Idea is to download small batches in parallel.
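Downloading "small batches in parallel" could look roughly like the following. Patient IDs are split into small batches, and a thread pool fetches each batch concurrently. `fetch_batch` is a hypothetical callable (for example, one FHIR search per batch). The batch size and worker count are illustrative, not tuned values.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterator, List, Sequence


def batched(ids: Sequence[str], size: int) -> Iterator[List[str]]:
    """Split a sequence of ids into consecutive lists of at most `size` items."""
    for i in range(0, len(ids), size):
        yield list(ids[i:i + size])


def download_history(patient_ids: Sequence[str],
                     fetch_batch: Callable[[List[str]], List[dict]],
                     batch_size: int = 10, workers: int = 4) -> List[dict]:
    """Fetch resources for many patients as many small, concurrent requests."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map returns results in input order even though batches run concurrently.
        results = pool.map(fetch_batch, batched(patient_ids, batch_size))
        return [resource for batch in results for resource in batch]


calls: List[List[str]] = []


def fake_fetch(batch: List[str]) -> List[dict]:
    """Hypothetical stand-in for one small FHIR search covering a batch of patients."""
    calls.append(batch)
    return [{"resourceType": "Observation", "patient": p} for p in batch]


patient_ids = [f"p{i}" for i in range(25)]
history = download_history(patient_ids, fake_fetch, batch_size=10)
```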

Concern raised by JJ:

Approach needs to be able to handle queries that involve all patients, and need to be able to prove findings (i.e. can't simply use an aggregate deduction model, because you can't show "here are the exact patients" if someone needs to confirm your numbers are correct).
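One way to address JJ's concern is for each indicator to keep the exact patient IDs behind its numerator and denominator. Any number can then be traced back to the patients that produced it. This is a hypothetical sketch; the `last_vl` field and the 1000 copies/mL suppression threshold in the usage are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set


@dataclass
class IndicatorResult:
    """Indicator value plus the exact patients behind it, so the numbers can be audited."""
    numerator_patients: Set[str] = field(default_factory=set)
    denominator_patients: Set[str] = field(default_factory=set)

    @property
    def value(self) -> float:
        # Design choice for this sketch: report 0.0 when nobody is in the denominator.
        if not self.denominator_patients:
            return 0.0
        return len(self.numerator_patients) / len(self.denominator_patients)


def compute_indicator(patients: Dict[str, dict],
                      in_denominator: Callable[[dict], bool],
                      in_numerator: Callable[[dict], bool]) -> IndicatorResult:
    result = IndicatorResult()
    for patient_id, record in patients.items():
        if in_denominator(record):
            result.denominator_patients.add(patient_id)
            if in_numerator(record):  # numerator is always a subset of the denominator
                result.numerator_patients.add(patient_id)
    return result


patients = {"p1": {"last_vl": 40}, "p2": {"last_vl": 5000}, "p3": {"last_vl": None}}
suppression = compute_indicator(
    patients,
    in_denominator=lambda r: r["last_vl"] is not None,   # has a VL result
    in_numerator=lambda r: r["last_vl"] < 1000,          # hypothetical suppression threshold
)
```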

2021-03-18: Squad Call

Attendees: Bashir, Allan, Mike Seaton, Grace P, Christina, Burke, Cliff, Daniel, Ian, Ivan N

Recording: https://iu.mediaspace.kaltura.com/media/t/1_qj4xzv9t

Summary: What do we do with big databases in batch mode (where it's not feasible to download everything as FHIR resources, or it would take a very long time)?

Two approaches came out to address the above problem:

1. First approach is limit by date (last week's discussion focused on this)

2. Second approach is to limit by patient cohort (focused on this today; idea is you'd limit queries based on patient instead of based on date, e.g. "Fetch everything for a cohort of patients") → We think this will be a better approach to try out moving forward.

Updates:

- PIH is looking for analytics solutions; Mike has reviewed the README and is interested in trying it out. Next: ______

- Bashir: Decision to do date-based separation of parquet files. Planning to go back to the FHIR-based search approach.

- Google contrib Omar: another 20%er from Google who is contributing PRs. Added JDBC mode to E2E tests.

- Google contrib William: still doing README changes/refactoring and moving dev-related things under the doc directory.

- Allan: Instead of using distributed mode, use local mode.

- Cliff: Looking at data warehousing & the issue of FHIR resources in the DW.

- Mozzy: Bug fixes so it can be used w/ Bahmni.

Discussion of Challenges:

FHIR structure isn't causing delays, but the conversion of resources into FHIR structure is slowing things down. TODO: Ian to look into improvements that could be made in FHIR module.

2021-03-11: Squad Call

Attendees: Bashir, Antony, Daniel, Grace, Allan, Moses, Tendo, Ojwang, Ken, Jen

Recording: https://iu.mediaspace.kaltura.com/media/t/1_lskh65vm

Summary: Discussed memory problems & performance in the Ampath example. Direction we're taking for Ampath: divide the DW based on the date dimension. For most resources, we don't generate data for the whole time, just the last 1-2 yrs. Then we need to support a special set of codes, generating obs just for those codes. The set of those obs is much smaller.

- Work Update

- Bashir: Code reviews & investigation of memory issue. Added JSON feature into pipeline since it was helpful w/ debugging.

- Allan: Working to dockerize streaming mode, how to register indicator generation.

- Suggestion from Bashir: Adding more indicator calculation logic would be great.

- Moses: Upcoming PR w/ bug fixes

- Sending too many requests: had been looking at moving to POST, but fetching 100 resources at a time already takes a few seconds and can flood MySQL and OMRS, so there isn't much value in fetching 1000s of resources at a time on a local machine. Fetching 90 resources at a time was actually faster.

- 2 other new contributors:

- Engineers at Google can spend 20% time on other projects. Posted some projects a few weeks ago related to this squad.

- Omar working on adding JDBC mode to batch end-to-end test

- William working on documentation fixes; trying to keep README user-centric rather than dev-centric. Anything dev-centric goes to separate files.

- Memory Issue with fetching encounter resources at AMPATH

- Compile a list of IDs to be fetched; send 100-resource requests at a time to OMRS; convert those into Avro and write them into a Parquet file. Because there is no shuffle anywhere, this should work continuously regardless of the size of the DB, and the memory requirement shouldn't increase.

- Investigated:

2021-03-04: Squad Call

Recording: https://iu.mediaspace.kaltura.com/media/t/1_yyg2els7

Attendees: Allan, Bashir, Moses, Antony, Cliff, Daniel, Ian, Jen, Ken, Sri Maurya, Steven Wanyee

Updates

- Bashir:

- Coverage reports added to repo.

- Bug identified and fix is out (not generating Parquet; adding a flag to set the number of shards)

- Fetching the initial ID list: realized this isn't being slowed down by FHIR search or JDBC mode. It turned out to be the generation of Parquet, which made it an order of magnitude slower.

- Beam (the distributed pipeline environment) decides how many segments (shards) of the output file to generate. This seems like an issue with Beam; Bashir to report it to Beam.

- More Google people expected to start contributing eventually - up to 20 people

- Allan

- Moses

- Antony to follow up with Bashir and Moses to setup on machine & to join Tuesday check-ins

- Apparently there has been some testing of CQL against large data sets with Spark

- Intro from Maurya Kummamuru: Extracting OMRS data with FHIR to perform CQL queries against it. Demo coming at the FHIR squad next Tuesday.

2021-02-25: Squad Call

Recording: https://iu.mediaspace.kaltura.com/media/t/1_fa0o9440

Updates: Substantial bug fixing is needed for the Ampath pipeline pilot; 2.5 hrs for a table with 800,000 patients is too slow.

2021-02-18: Squad call

Recording: https://iu.mediaspace.kaltura.com/media/t/1_m0rqigrl

Attendees: Burke, Tendo, Ian, Bashir, Amos Laboso, Cliff, Daniel, Jen, Grace, Allan, Mozzy, Sharif

Updates: Focus at the moment is on preparing for the Ampath pipeline pilot release.

2021-01-28: Squad call

Recording: https://iu.mediaspace.kaltura.com/media/1_glpg4zts

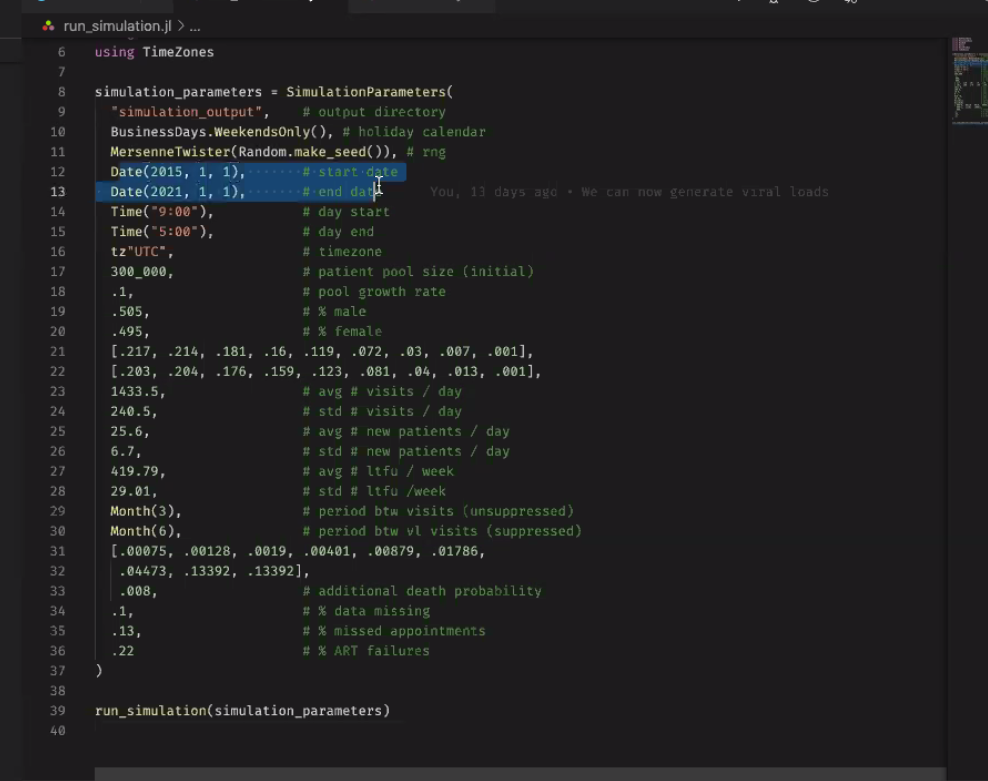

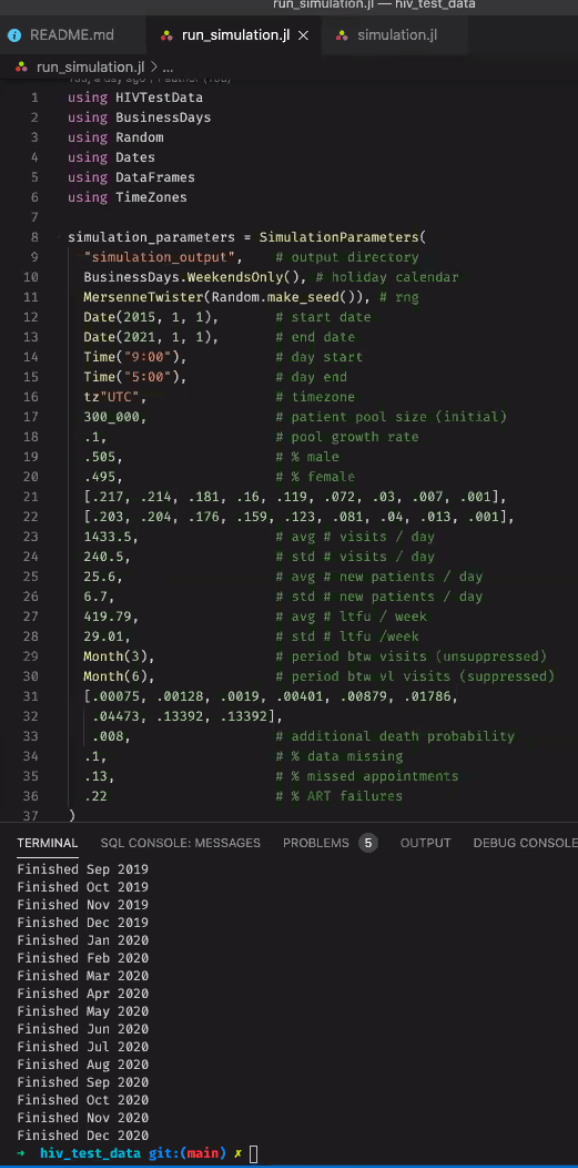

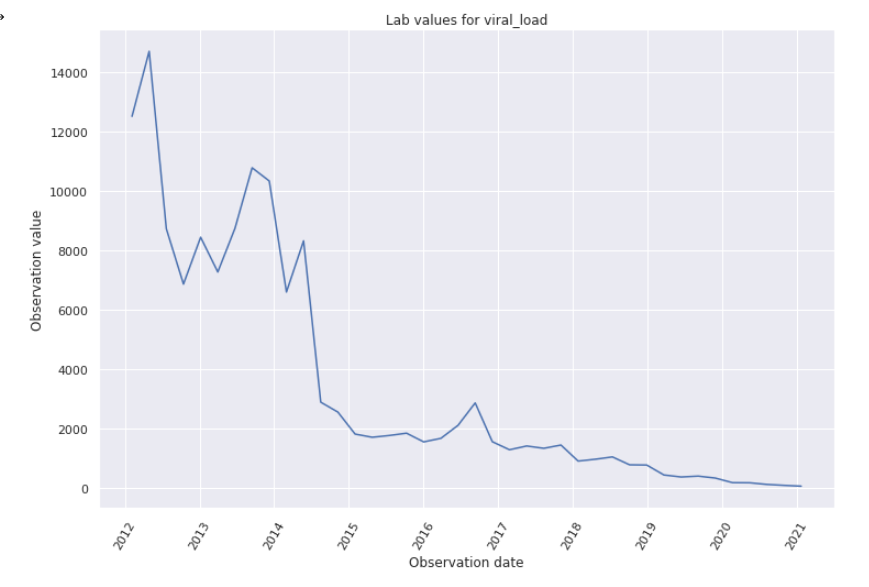

2021-01-21: Data Simulation Engine demo, Random Trended Lab Data Generation demo, and How to Use Spark demo

Recording: https://iu.mediaspace.kaltura.com/media/1_q8wr0m9h

Attendees: Burke, Bashir, Antony, Cliff, Daniel, Ian, Jacinta, JJ, Juliet, Justin Tansuwan, Piotr, Steven Wanyee, Grace

- Data Simulation Engine: Ian demo

- Engine that generates random patients and random VL values for them, e.g., a simulation that runs over a 5-year time range to show people coming in and getting lab tests over 5 years of visits:

- Built using data such as LTFU (lost to follow-up) rates and the % of patients with a suppressed VL

- Steps through every simulated day and outputs a file

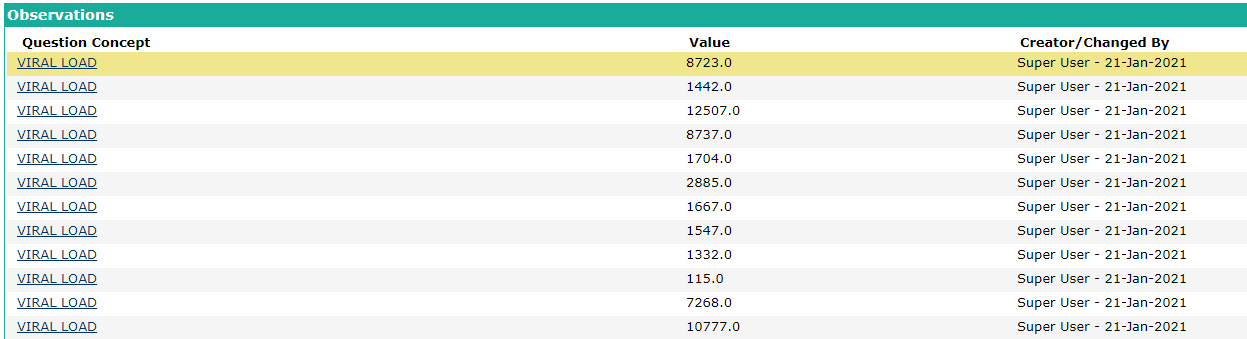
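The day-by-day stepping described in Ian's demo can be sketched as below. This is not the demo's code. The daily LTFU probability, suppression rate, 180-day test interval and viral load ranges are all assumed parameters. Results are returned as a list of rows rather than written to a file.

```python
import random
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class SimPatient:
    patient_id: int
    active: bool = True  # False once the patient is lost to follow-up


def simulate(n_patients: int, start: date, days: int, daily_ltfu_prob: float,
             suppression_rate: float, test_every_days: int = 180,
             seed: int = 0) -> List[dict]:
    """Step through every simulated day and emit viral load (VL) results.

    Hypothetical parameters: each active patient has a `daily_ltfu_prob` chance per day
    of being lost to follow-up, and gets a VL test every `test_every_days` days that
    is suppressed (< 1000) with probability `suppression_rate`.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    patients = [SimPatient(i) for i in range(n_patients)]
    rows: List[dict] = []
    for day in range(days):
        today = start + timedelta(days=day)
        for patient in patients:
            if not patient.active:
                continue
            if rng.random() < daily_ltfu_prob:
                patient.active = False  # no further visits after LTFU
                continue
            if day % test_every_days == 0:
                suppressed = rng.random() < suppression_rate
                vl = rng.randint(20, 999) if suppressed else rng.randint(1000, 500_000)
                rows.append({"patient_id": patient.patient_id,
                             "date": today.isoformat(), "viral_load": vl})
    return rows


# Five years of simulated visits for 100 patients.
rows = simulate(100, date(2016, 1, 1), 5 * 365, daily_ltfu_prob=0.0005, suppression_rate=0.8)
```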

- Generating Random Lab Data: Grace/JJ demo

- Colab Notebook: https://colab.research.google.com/drive/1EMiAkbhW7TzEVlFRfUGFPIpBSgZ6GmSX#scrollTo=Uk-W16jgSf-f; 5 min Demo Video: https://www.loom.com/share/ed62dc728e46401894a5cdc57ef28bd3

- Running through the Notebook generates and POSTs random, bounded, trended lab data over time. Example demo'd: Posting 40 Viral Loads

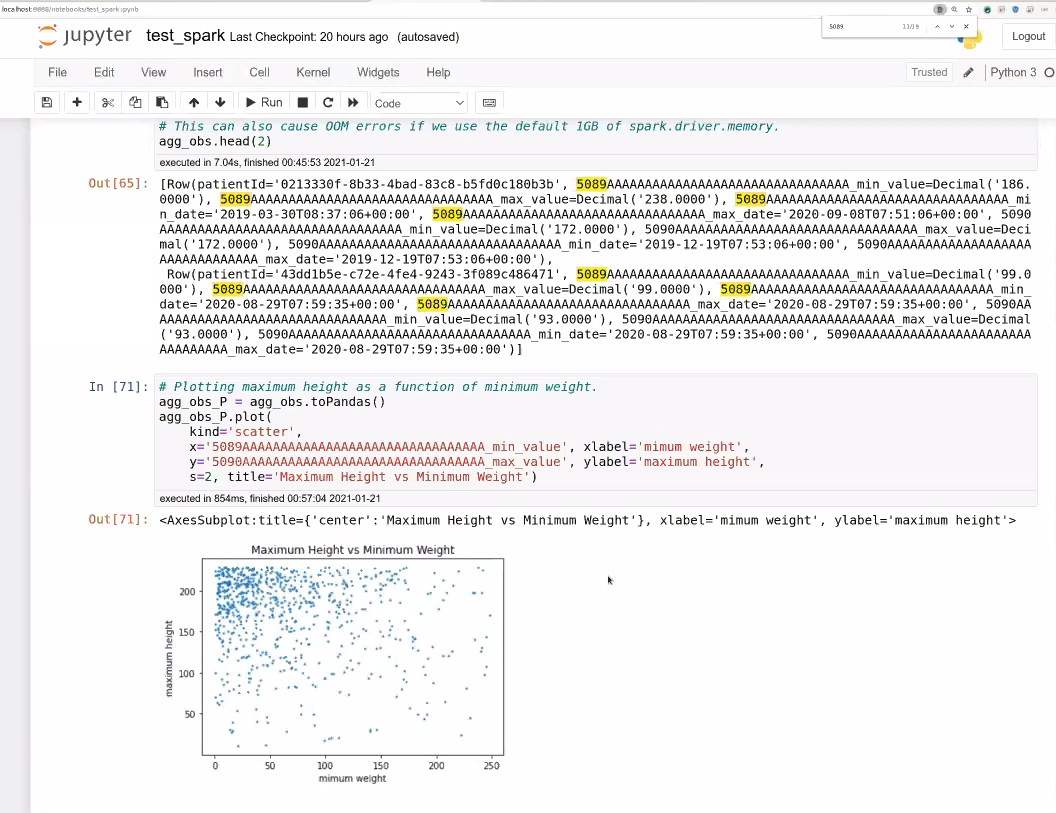

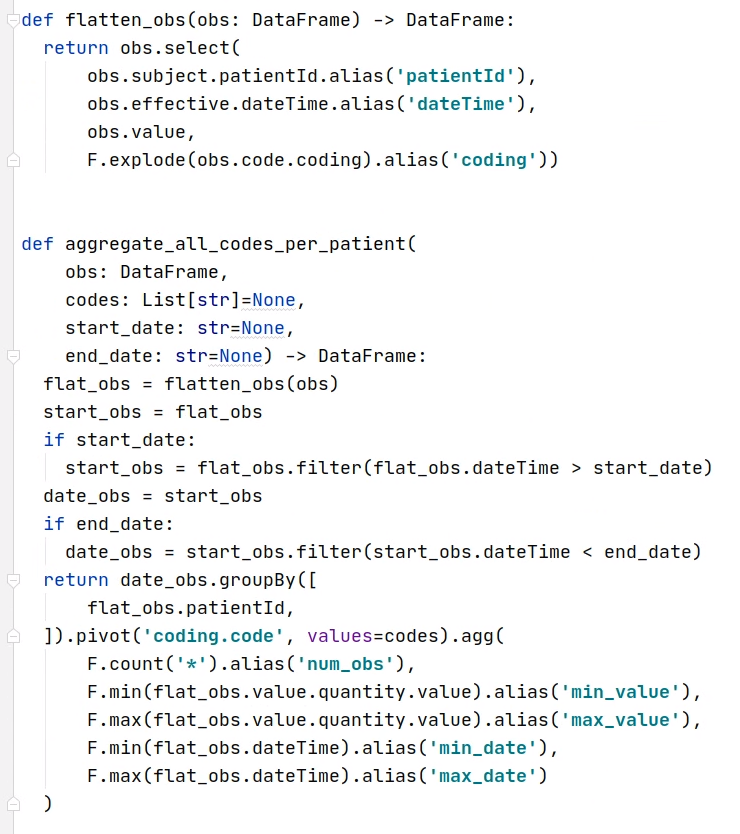

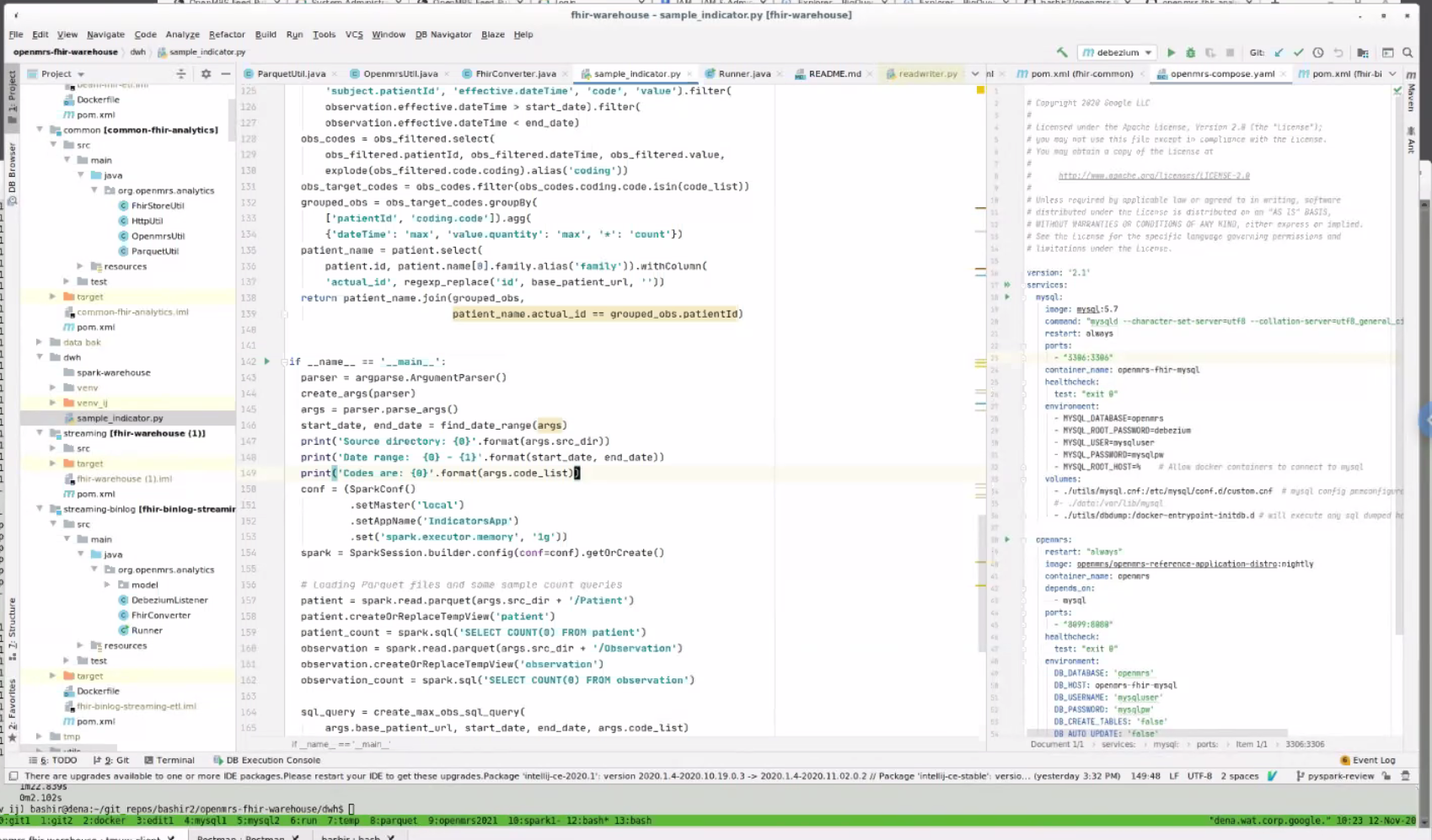

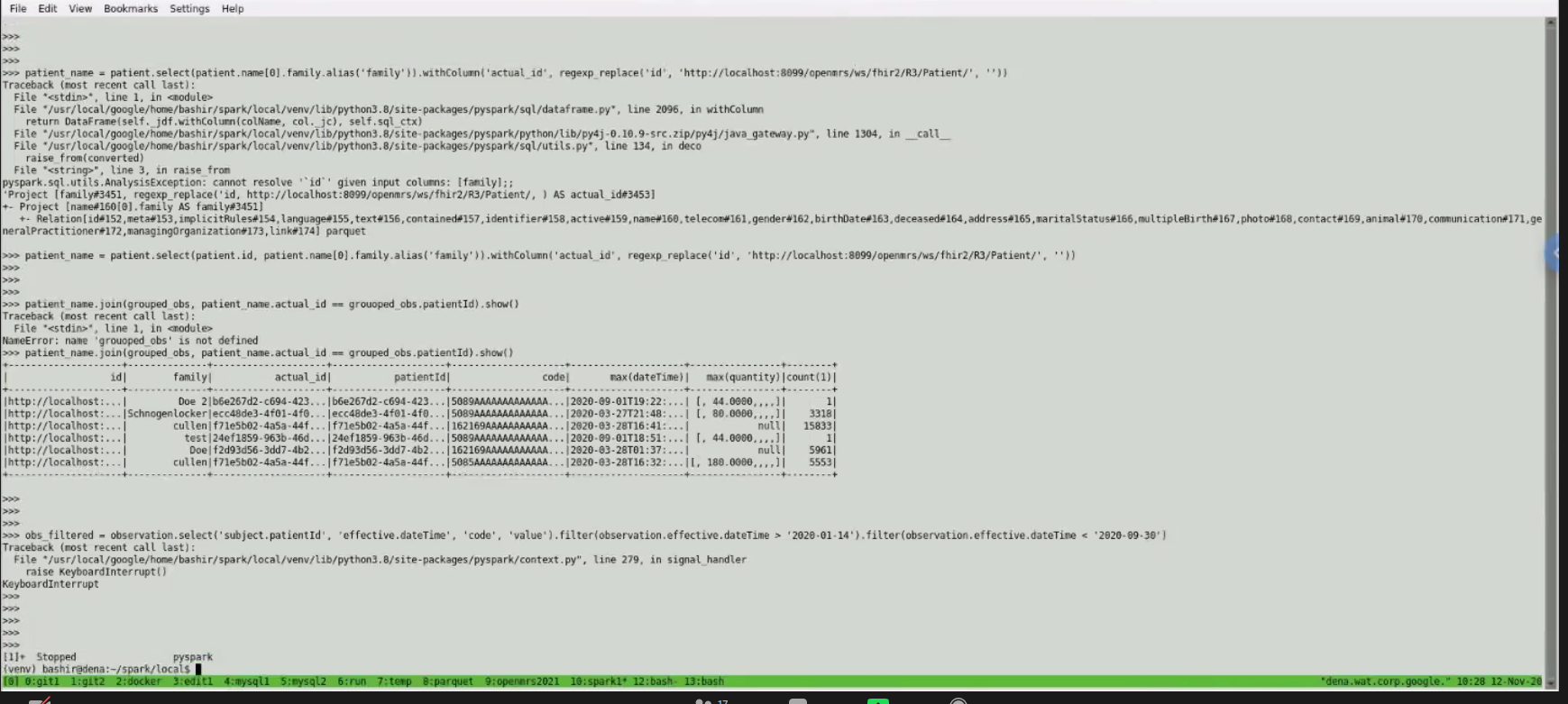

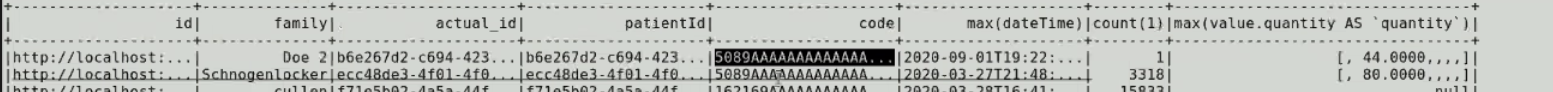

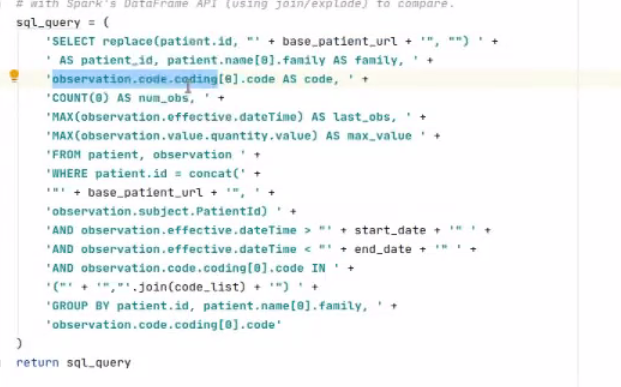

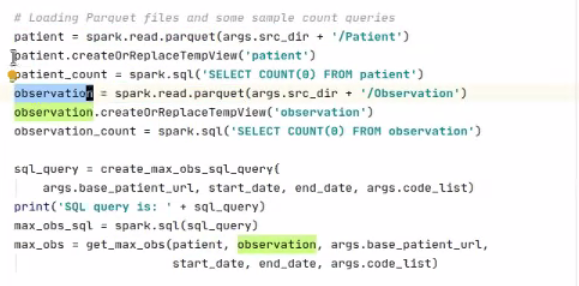

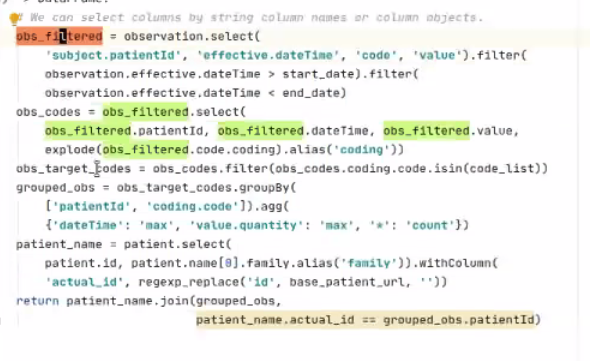
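"Random, bounded, trended" values can be generated by adding Gaussian noise around a linear trend and clamping each value into an allowed range. The sketch below is a hypothetical stand-in for the notebook's generator and does not POST anything to OpenMRS. The trend, noise, bounds and 90-day spacing are assumptions.

```python
import random
from datetime import date, timedelta
from typing import List, Tuple


def trended_series(n: int, start_value: float, trend_per_step: float, noise_sd: float,
                   low: float, high: float, start: date, step_days: int = 90,
                   seed: int = 0) -> List[Tuple[str, float]]:
    """Return n (ISO date, value) points: linear trend + Gaussian noise, clamped to [low, high]."""
    rng = random.Random(seed)
    points: List[Tuple[str, float]] = []
    for i in range(n):
        expected = start_value + trend_per_step * i      # the underlying trend
        noisy = rng.gauss(expected, noise_sd)             # random variation around it
        value = float(min(high, max(low, noisy)))         # keep within plausible bounds
        points.append(((start + timedelta(days=step_days * i)).isoformat(), round(value, 1)))
    return points


# 40 decreasing viral loads (e.g., a patient responding to treatment), one every 90 days.
series = trended_series(40, start_value=50_000, trend_per_step=-1_500, noise_sd=2_000,
                        low=20, high=10_000_000, start=date(2020, 1, 1))
```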

- How to Use Spark: Bashir demo

- Goal is to provide APIs over time to get base representations of the data that's being stored in FHIR for now. Bashir trying to show how this can flatten and remove the complexity of FHIR from what the person needs to understand to run the query.

- Changing stratification and time period could take minutes to an hour. E.g. when a PEPFAR Indicator requirement
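The sketch below illustrates the kind of flattening Bashir described by turning a FHIR R4 Observation into a single flat row. It uses plain Python rather than Spark and picks only a few fields, which is an assumption about what an analyst needs. It also takes only the first coding, and the concept system and code in the usage are made up.

```python
import json
from typing import Optional


def flatten_observation(obs_json: str) -> dict:
    """Flatten a FHIR R4 Observation (JSON string) into one row of simple columns."""
    obs = json.loads(obs_json)
    coding = (obs.get("code", {}).get("coding") or [{}])[0]   # first coding only
    quantity = obs.get("valueQuantity", {})                   # ignores other value[x] types
    subject_ref: Optional[str] = obs.get("subject", {}).get("reference")
    return {
        "id": obs.get("id"),
        "patient_id": subject_ref.split("/")[-1] if subject_ref else None,  # "Patient/p1" -> "p1"
        "code": coding.get("code"),
        "code_system": coding.get("system"),
        "effective": obs.get("effectiveDateTime"),
        "value": quantity.get("value"),
        "unit": quantity.get("unit"),
    }


sample = json.dumps({
    "resourceType": "Observation",
    "id": "obs-1",
    "code": {"coding": [{"system": "http://example.org/concepts", "code": "VL"}]},
    "subject": {"reference": "Patient/p1"},
    "effectiveDateTime": "2021-01-15",
    "valueQuantity": {"value": 40, "unit": "copies/mL"},
})
row = flatten_observation(sample)
```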

2021-01-14

Attendees: ?

Top Key Points

- Discussed the question of whether to use SQL or Python to develop indicator logic and decided to use Python.

- Discussed how Spark deployment will be done and decided to focus on the local version (with many cores) for the time being.
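Since indicator logic will be written in Python, here is a hypothetical example of the kind of function involved. It counts distinct patients with a qualifying event per month within a reporting period, which is a simple time-based disaggregation. The event fields (`patient_id`, `date`) are assumptions.

```python
from collections import Counter
from datetime import date
from typing import Dict, Iterable, Set, Tuple


def monthly_patient_counts(events: Iterable[dict], start: date, end: date) -> Dict[str, int]:
    """Count distinct patients with at least one qualifying event per month (YYYY-MM)."""
    seen: Set[Tuple[str, str]] = set()
    counts: Counter = Counter()
    for event in events:
        event_date = event["date"]
        if not (start <= event_date <= end):
            continue  # outside the reporting period
        key = (event_date.strftime("%Y-%m"), event["patient_id"])
        if key not in seen:  # count each patient at most once per month
            seen.add(key)
            counts[key[0]] += 1
    return dict(sorted(counts.items()))


events = [
    {"patient_id": "p1", "date": date(2021, 1, 5)},
    {"patient_id": "p1", "date": date(2021, 1, 20)},
    {"patient_id": "p2", "date": date(2021, 1, 7)},
    {"patient_id": "p1", "date": date(2021, 2, 2)},
    {"patient_id": "p3", "date": date(2020, 12, 31)},
]
by_month = monthly_patient_counts(events, date(2021, 1, 1), date(2021, 3, 31))
```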

2021-01-07

Attendees: Reuben, Ian, Burke, Kaweesi, Cliff, Jen, Allan, Bashir, Patric Prado, Kenneth Ochieng, Grace Potma

Top 3 Key Points

- Our Highest Priority Q1 Milestone: To continuously be running beside the current indicator calculation AMPATH is using, and to show they generate the same indicators

- Other Pressing Milestone: A second implementer - esp. one with multiple sites who want to generate analytics by merging them.

Notes:

- Updates: Bashir now moved from Google Cloud team to Google Health. Opens opportunity for him to continue contributing to OMRS for a few more quarters. OMRS has been given $5k in AWS credits; however, Google Cloud could have ~$20k available if we need it. Question is not "what to do with those credits" but "what do we need".

- E2E test progress: 1 component of each hasn't been merged yet. The first was a test for being able to spin up a container (done). The last component of the E2E tests should eventually support multiple modes (e.g., Parquet vs FHIR store, batch vs streaming); we can add them gradually.

- Large data set: Ian working on simulator for this (takes time to work w/ realistic data!)

- 2021 Q1 Goals

- Main Goal (Highest Priority): To continuously be running beside the current indicator calculation AMPATH is using, and to show they generate the same indicators. Next steps: Implementing those 10 reference indicators.

- Other Goal: A second implementer - esp. one with multiple sites who want to generate analytics by merging them.

- Who to reach out to: PIH, Bahmni (JSS), Jembi, ITECH (iSantePlus in every hospital in Haiti - Piotr has been involved in analytics work b/c they're looking to aggregate data into one store)

- Value Prop: If you have multiple deployments and find that managing indicators takes repeated headaches to maintain - this is for you. You can get your indicators with little maintenance, and it should work at scale. If you have many sites, many patients, many obs, this is fast, will enable interactive queries that don't impact your production system performance. The beta is early so your feedback and your org's needs will allow the squad to ensure what we're building is applicable to your use case.

- Key points from 2020 Retrospective: https://docs.google.com/presentation/d/1f0VFO0eucWZYpIDOtm8smcCNWdW_7YjdhBWS7bdfTJ8/edit#slide=id.gb1d91779dd_0_733

Directory

Helpful Links

To Address Next Meeting

- Going through SQL vs DataFrame based queries in Spark

- Decide which way people prefer for indicator implementation (and other feedback)

Meeting Notes

2021-04-29:

Want to hear from other people; open new perspective. Without having a dedicated Product Manager, need reps from other teams to bring their problems and efforts to address. Otherwise without PM, dev leads can't take on burden of going around and understanding others' problems.

- PIH

- Using YAML and SQL to define exports that pull data from OpenMRS to a flat table that PowerBI can use

- OHRI - UCSF

- COVID

Ampath run updates

Dev updates

...

AES has (to date) been the combination of three organizations/projects working together on an analytics engine to meet their specific needs:

- AMPATH reporting needs

- PLIR needs

- Haiti SHR needs

MVP

- System that can replace AMPATH's ETL and reporting framework

TODO: Mike to create an example of "if we were to create a flat table, how would that look"

2021-04-15: Squad Call

https://iu.mediaspace.kaltura.com/media/t/1_80xjwir1

Attendees: Debbie, Bashir, Daniel, David, Burke, Ian, Jen, Allan, Natalie, Tendo, aparna, Mike S, Naman, Mozzy

Welcome from PIH: Natalie, Debbie, David, Mike

- M&E team get a spreadsheet emailed from Jet.

- Issues with de-duplicating patients.

- Datawarehouse created for Malawi being redone.

- Recent projects have taken different approach - M&E teams analyze the KPI requirements, adn then design the SQL tables they'd want, then they do an ETL table to have exactly what they'd want for PEPFAR reporting and MCH reporting.

- Past approach was too complicated; didn't promote data use.

Details of past situation

- Previously you'd go into OMRS and you'd export and download an Excel spreadsheet. Exports started to be more and more wrong. Wrong concept, or new concepts added that weren't reflected in exports; people lost trust. To see how things were calculated was very opaque.

- 7 health facilities into one DB; another 7 into another DB; then the 2 DBs merged together in reporting (which is done on the production server of one of those sets b/c there's not a 3rd server, but a new one is being set up for reporting.)

- They were hoping to set up on Jet but seems proprietary, expensive, and old; starting hosting clinical data in this 2 yrs ago. Started pulling in raw (or flattened?) tables - patient tables, enrollment tables. Pull those into the Jet DW

- COVID started and they also weren't able to travel to sites to do training on Jet.

- Switched back to Pentaho & MySQL - used before, open source. Hired 2 new people on Malawi team who were comfortable with that tool and could build their own warehouse in there.

- Main need: 1 table per form. So however concepts organized into a form, needs to make sense as a table unit. Each form - 1 table.

- Would like to add things like enrollment, statuses.

- Why? Forms = intuitive unit; indicator calc usually like obs at last visit,

- 80% Doesn't usually require cross-visit info

- KEMR similar - created a table to automate 1 tbl per form

- Previous tables were extremely wide because it was all the questions we'd ever asked. Would cut that up into multiple tables.

- PowerBI dashboards will be connected to these 1-form tables.

- Volume: Malawi: 50,000pts, a few million obs. Haiti: 500,000-1m, 10's millions of encounters, x4-5 for obs.

- Time: 5-10 mins to run. But in some other servers it's longer and a barrier, e.g. over an hour and increasing by the day - so that's why they're moving away from full DB flattening to one that's more targeted based on what data you're looking for.

- Ultimate Goal: Anything that makes it easier, esp. for local M&E people, to get their hands on the data and make the reports, is great. Easier access, and automation that reduces the workload of local M&E.

Overview:

- Targeting Ampath, because: 10's of clinics supported in a central DB. Has a complicated ETL system that doesn't scale very well, complex so few people understand it, and where the data came from is too opaque to instill sense of trust; want to replace it. M&E team needs flattened data. Want to flatten data into analytical tables that can be converted into tables like excel that they can run their own reports on.

- next, want to try supporting someone who is distributed, and then centralize the data.

- Design goals:

- Easy to use on a single machine/laptop. Whole system can be run on signle machine.

- ETL part: 2 modes of operations, batch mode and streaming mode.

- Reporting part:

Questions

- if you have any other questions for what might be useful for an M&E/Analytics person please email me at nprice@pih.org

- What are the best ways forward for PIH to try this and apply to some of their mor challenging use cases? E.g. local M&E teams not showing analytics of things happening over time

- Follow up to figure out how to create the FHIR resources...

2021-04-01: Squad Call

Attendees: Bashir, Moses, Tendo, Burke, Daniel Ian

Regrets: Allan, JJ, Grace P, Cliff

- Bashir: working on date-based filtering, as a way to get data only for "active" patients within a date range (e.g., all data for "active" patients within the past year)

- Given a list of resources and a date range, we pull all dated resources within the date range

- For all pulled resources that refer to a patient, we pull all data for each patient

- Moses: working on a blocker, inability to pull person and patient resources for the same individual in the same run (#144)

- Tendo: working on implementing media resource, working through complex handler

- Cliff (in absentia) working on running system via docker command

- Skipping meeting next week due to OpenMRS 2021 Spring ShowCase

2021-03-25: Squad Call

Attendees: Bashir, Allan, Cliff, Daniel, Ian, JJ, Allan, Ivan N, Willa, Grace P

Recording: https://iu.mediaspace.kaltura.com/media/t/1_msvydoso

Summary:

1. Decision: New design for date-based fetching in the batch pipeline, based on active period (for each patient "active" in care, all the resources will be extracted). We defined an "active" period (the last year), and break down the fetch into 2 parts: (1) any obs from the last year, then (2) for each patient with a history in the active period, all of their history will be downloaded. Why: Avoids search query time-out problems.

2. Later: Add ability to retrospectively extract resources back in time (re-run pipleline for any time period) in order to update your datawarehouse.

Updates:

- Bashir: Close to fix for memory issue; working on date-based

- Allan: Trying to finalize orchestration of indicator calculation. Implementing using docker & docker compose. Streaming mode.

- Antony (regrets): Working on list of indicators that need baseline data.

- Cliff: Streaming mode script. Refactoring in batch mode.

- Mike: Experimenting with approach, more updates next week.

Current plan for implementing date-based fetching: Getting the data when you need it, not all at once.

- Implemented on FHIR search base path. 2 modes for batch: pipeline JDVC mode, and FHIR search base.

- First step allows qualifying "I need last year"

- List of pts who've had >=1 obs in last year

- Second download, we download all of the resources going back.

- This will avoid the search query time-out problem we ran into in past. Idea is to download small batches in parallel.

Concern raised by JJ:

Approach needs to be able to handle queries that involve all patients, and need to be able to prove findings (i.e. can't simply use an aggregate deduction model, because you can't show "here are the exact patients" if someone needs to confirm your numbers are correct).

2021-03-18: Squad Call

Attendees: Bashir, Allan, Mike Seaton, Grace P, Christina, Burke, Cliff, Daniel, Ian, Ivan N

Recording: https://iu.mediaspace.kaltura.com/media/t/1_qj4xzv9t

Summary: What do we do with big databases with batch mode (where it's not feasible to download everything as FHIR resources or it will take a very long time)?

Two approaches came out to address the above problem:

1. The first approach is to limit by date (last week's discussion focused on this).

2. Second approach is to limit by patient cohort (focused on this today; idea is you'd limit queries based on patient instead of based on date, e.g. "Fetch everything for a cohort of patients") → We think this will be a better approach to try out moving forward.
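To make the two approaches concrete, here is a sketch of the FHIR search URLs each one would generate. `date` and `patient` are standard FHIR search parameters on Observation and Encounter, and comma-separated values are OR-ed in FHIR search; whether the OpenMRS FHIR module accepts long comma-separated patient lists, and the batch size of 50, are assumptions to verify. The base URL is a placeholder.

```python
from urllib.parse import urlencode


def date_limited_query(base_url: str, resource_type: str, since: str) -> str:
    """Approach 1: limit by date, e.g. all Observations dated on or after `since`."""
    return f"{base_url}/{resource_type}?" + urlencode({"date": f"ge{since}"})


def cohort_queries(base_url: str, resource_type: str, patient_ids: list[str],
                   batch_size: int = 50) -> list[str]:
    """Approach 2: limit by patient cohort, one search per batch of patients.

    Each query asks for everything of `resource_type` for its batch of patients,
    so no single search has to cover the whole database.
    """
    queries = []
    for i in range(0, len(patient_ids), batch_size):
        batch = patient_ids[i:i + batch_size]
        queries.append(f"{base_url}/{resource_type}?" + urlencode({"patient": ",".join(batch)}))
    return queries
```

The cohort queries are independent of each other, so they can be issued in parallel.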

Updates:

- PIH is looking for analytics solutions; Mike has reviewed the README and is interested in trying it out. Next: ______

- Bashir: Decision to do date-based separation of parquet files. Planning to go back to the FHIR-based search approach.

- Google contrib Omar: another 20%er from Google who is contributing PRs. Added JDBC mode to E2E tests.

- Google contrib William: still doing README changes/refactoring and moving dev-related things under the doc directory.

- Allan: Instead of using distributed mode, use local mode.

- Cliff: Looking at data warehousing and the issue of FHIR resources in the DW.

- Mozzy: Bug fixes so it can be used w/ Bahmni.

Discussion of Challenges:

The FHIR structure itself isn't causing delays; the conversion of resources into FHIR structure is what's slowing things down. TODO: Ian to look into improvements that could be made in the FHIR module.

2021-03-11: Squad Call

Attendees: Bashir, Antony, Daniel, Grace, Allan, Moses, Tendo, Ojwang, Ken, Jen

Recording: https://iu.mediaspace.kaltura.com/media/t/1_lskh65vm

Summary: Discussed memory problems and performance in the AMPATH example. Direction we're taking for AMPATH: divide the DW based on the date dimension. For most resources, we don't generate data for the whole time range, just the last 1-2 years. We then need to support a special set of codes, for which we generate the obs just for those codes. The set of those obs is much smaller.
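A sketch of the resulting inclusion rule, assuming obs whose code is in the special set are kept regardless of date while everything else is limited to a recent window. The function name and the 365-day approximation of a year are assumptions.

```python
from datetime import date, timedelta


def keep_in_warehouse(obs_code: str, obs_date: date, as_of: date,
                      special_codes: frozenset[str], years: int = 2) -> bool:
    """Keep recent obs, plus obs of any date whose code is in the special set."""
    # Approximate a year as 365 days; good enough for a coarse date window.
    window_start = as_of - timedelta(days=365 * years)
    return obs_date >= window_start or obs_code in special_codes
```

Because the special set is small, the older obs it lets through stay a small fraction of the warehouse.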

- Work Update

- Bashir: Code reviews & investigation of memory issue. Added JSON feature into pipeline since it was helpful w/ debugging.

- Allan: Working to dockerize streaming mode, how to register indicator generation.

- Suggestion from Bashir: Adding more indicator calculation logic would be great.

- Moses: Upcoming PR w/ bug fixes

- Sending too many requests: had been looking at moving to POST, but fetching 100 resources at a time already takes a few seconds and can flood MySQL and OMRS, so doesn't see much value in fetching thousands of resources at a time on a local machine. Batches of 90 resources were actually faster.

- 2 other new contributors:

- Engineers at Google can spend 20% time on other projects. Posted some projects a few weeks ago related to this squad.

- Omar working on adding JDBC mode to batch end-to-end test

- William working on documentation fixes; trying to keep README user-centric rather than dev-centric. Anything dev-centric goes to separate files.

- Memory Issue with fetching encounter resources at AMPATH

- Compile a list of IDs to be fetched; send requests for 100 resources at a time to OMRS; convert those into Avro and write them into a Parquet file. Because there is no shuffle anywhere, this should keep working regardless of the size of the DB, and the memory requirement shouldn't increase.

- Investigated:
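The memory-bounded fetch described under "Memory Issue with fetching encounter resources at AMPATH" can be sketched as follows. `fetch` and `write` are hypothetical callables standing in for the OMRS request and the Avro/Parquet conversion; the real pipeline runs this in Beam. `batch_size` is the same knob as in the 100-vs-90 resources discussion.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator


def batches(ids: Iterable[str], size: int) -> Iterator[list[str]]:
    """Yield consecutive lists of at most `size` IDs without materializing all of them."""
    it = iter(ids)
    while chunk := list(islice(it, size)):
        yield chunk


def fetch_and_write(ids: Iterable[str],
                    fetch: Callable[[list[str]], list[dict]],
                    write: Callable[[list[dict]], None],
                    batch_size: int = 100) -> int:
    """Fetch each batch and write it out immediately.

    Nothing is grouped or shuffled across batches, so peak memory depends on
    batch_size, not on the total number of resources in the database.
    """
    written = 0
    for chunk in batches(ids, batch_size):
        resources = fetch(chunk)   # e.g. one request for up to batch_size resources
        write(resources)           # e.g. convert to Avro and append to a Parquet file
        written += len(resources)
    return written
```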

2021-03-04: Squad Call

Recording: https://iu.mediaspace.kaltura.com/media/t/1_yyg2els7

Attendees: Allan, Bashir, Moses, Antony, Cliff, Daniel, Ian, Jen, Ken, Sri Maurya, Steven Wanyee

Updates

- Bashir:

- Coverage reports added to repo.

- Bug identified & fix is out (Parquet was not being generated; adds a flag to set the number of shards)

- Fetching the initial ID list: Realized this isn't being slowed down by FHIR search or JDBC mode. It turns out the generation of Parquet was the cause, making it an order of magnitude slower.

- Beam: Distributed pipeline environment. Beam decides how many segments (shards) of the output file to generate. Seems like an issue with Beam; Bashir to report it to Beam.

- More Google people expected to start contributing eventually - up to 20 people

- Allan

- Moses

- Antony to follow up with Bashir and Moses to setup on machine & to join Tuesday check-ins

- Apparently there has been some testing of CQL against large data sets with Spark

- Intro from Maurya Kummamuru: Extracting OMRS data with FHIR to perform CQL queries against it. Demo coming at the FHIR squad next Tuesday.
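Bashir's fix above adds a flag to set the number of shards for the Parquet output instead of leaving the count to Beam. A sketch of what a fixed shard count means: records are split across exactly N files, named here after Beam's default `-SSSSS-of-NNNNN` shard template. The round-robin assignment and function names are assumptions for illustration, not how Beam distributes records.

```python
def shard_name(prefix: str, index: int, num_shards: int, suffix: str = ".parquet") -> str:
    """Beam-style shard file name, e.g. obs-00000-of-00003.parquet."""
    return f"{prefix}-{index:05d}-of-{num_shards:05d}{suffix}"


def assign_shards(records: list, num_shards: int, prefix: str) -> dict[str, list]:
    """Round-robin records across a fixed number of output files."""
    if num_shards < 1:
        raise ValueError("num_shards must be at least 1")
    shards = {shard_name(prefix, i, num_shards): [] for i in range(num_shards)}
    names = list(shards)
    for i, record in enumerate(records):
        shards[names[i % num_shards]].append(record)
    return shards
```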

2021-02-25: Squad Call

Recording: https://iu.mediaspace.kaltura.com/media/t/1_fa0o9440

Updates: Substantial bug fixing needed for the AMPATH pipeline pilot; 2.5 hrs for a table with 800,000 patients is too slow.

2021-02-18: Squad call

Recording: https://iu.mediaspace.kaltura.com/media/t/1_m0rqigrl

Attendees: Burke, Tendo, Ian, Bashir, Amos Laboso, Cliff, Daniel, Jen, Grace, Allan, Mozzy, Sharif

Updates: Focus at the moment is on preparing for the AMPATH pipeline pilot release.

2021-01-28: Squad call

Recording: https://iu.mediaspace.kaltura.com/media/1_glpg4zts

2021-01-21: Data Simulation Engine demo, Random Trended Lab Data Generation demo, and How to Use Spark demo

Recording: https://iu.mediaspace.kaltura.com/media/1_q8wr0m9h

Attendees: Burke, Bashir, Antony, Cliff, Daniel, Ian, Jacinta, JJ, Juliet, Justin Tansuwan, Piotr, Steven Wanyee, Grace

- Data Simulation Engine: Ian demo

- Engine that generates random patients and random VL values for them. E.g. simulation that runs for a time range of 5 years, to show people coming in and getting lab tests over the course of 5 years of visits:

- Built using data like LTFU, % of pts with a suppressed VL

- Steps through every simulated day and outputs a file

- Generating Random Lab Data: Grace/JJ demo

- Colab Notebook: https://colab.research.google.com/drive/1EMiAkbhW7TzEVlFRfUGFPIpBSgZ6GmSX#scrollTo=Uk-W16jgSf-f 5 min Demo Video: https://www.loom.com/share/ed62dc728e46401894a5cdc57ef28bd3

- Running through the Notebook generates and POSTs random, bounded, trended lab data over time. Example demo'd: Posting 40 Viral Loads

- How to Use Spark: Bashir demo

- Goal is to provide APIs over time to get base representations of the data that's being stored in FHIR for now. Bashir trying to show how this can flatten and remove the complexity of FHIR from what the person needs to understand to run the query.

- Changing stratification and time period could take minutes to an hour. E.g. when a PEPFAR Indicator requirement
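For Ian's Data Simulation Engine demo, a toy version of the day-stepping idea. All parameter names, the fixed testing interval, and the daily LTFU probability model are assumptions; it also returns lab events instead of writing a file each day.

```python
import random
from dataclasses import dataclass


@dataclass
class SimPatient:
    patient_id: int
    enrolled_day: int
    in_care: bool = True


def simulate(days: int, new_per_day: int, ltfu_daily: float, suppressed_share: float,
             test_every: int = 180, seed: int = 0) -> list[tuple[int, int, bool]]:
    """Step through each simulated day; return (day, patient_id, suppressed) VL events."""
    rng = random.Random(seed)
    patients: list[SimPatient] = []
    events = []
    for day in range(days):
        # Enrol today's new patients.
        for _ in range(new_per_day):
            patients.append(SimPatient(len(patients), day))
        for p in patients:
            if not p.in_care:
                continue
            if rng.random() < ltfu_daily:
                p.in_care = False  # lost to follow-up from today onwards
                continue
            # Patients in care get a VL test every `test_every` days after enrolment.
            if (day - p.enrolled_day) % test_every == 0:
                events.append((day, p.patient_id, rng.random() < suppressed_share))
    return events
```

Fixing the seed makes a simulated data set reproducible, which helps when comparing indicator runs.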
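For the Grace/JJ random lab data demo, one way to get "random, bounded, trended" values is a random walk with a drift term, clipped to fixed bounds. This is a swapped-in technique, not necessarily what the Colab notebook does; the parameters (e.g. values on a log10 copies/mL scale) are assumptions, and posting the values to FHIR is left out.

```python
import random


def trended_series(start: float, drift: float, noise: float, n: int,
                   low: float, high: float, seed: int = 0) -> list[float]:
    """Random walk with drift, clipped to [low, high] at every step."""
    rng = random.Random(seed)
    values, current = [], start
    for _ in range(n):
        current += drift + rng.gauss(0.0, noise)
        current = min(max(current, low), high)  # keep each value within bounds
        values.append(current)
    return values
```

For example, `trended_series(5.0, -0.1, 0.2, 40, 1.3, 7.0)` yields 40 values trending downward, like a cohort of viral loads falling after treatment starts.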
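For Bashir's Spark demo, the flattening idea on a single resource: pull the fields a query author needs out of a nested FHIR R4 Observation into one flat row. The demo ran on Spark over Parquet; this plain-Python function and its choice of output columns (including taking only the first `coding`) are assumptions.

```python
def flatten_observation(obs: dict) -> dict:
    """Flatten commonly queried fields of a FHIR R4 Observation into one row."""
    coding = (obs.get("code", {}).get("coding") or [{}])[0]  # first coding only
    quantity = obs.get("valueQuantity", {})
    return {
        "id": obs.get("id"),
        "patient": obs.get("subject", {}).get("reference"),
        "code_system": coding.get("system"),
        "code": coding.get("code"),
        "value": quantity.get("value"),
        "unit": quantity.get("unit"),
        "effective": obs.get("effectiveDateTime"),
    }
```

Once every Observation looks like this row, an indicator query needs no knowledge of FHIR nesting.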

2021-01-14

Attendees: ?

Top Key Points

- Discussed the question of whether to use SQL or Python to develop indicator logic and decided to use Python.

- Discussed how Spark deployment will be done and decided to focus on the local version (with many cores) for the time being.

2021-01-07

Attendees: Reuben, Ian, Burke, Kaweesi, Cliff, Jen, Allan, Bashir, Patric Prado, Kenneth Ochieng, Grace Potma

Top 3 Key Points

- Our Highest Priority Q1 Milestone: To continuously be running beside the current indicator calculation AMPATH is using, and to show they generate the same indicators

- Other Pressing Milestone: A second implementer - esp. one with multiple sites who want to generate analytics by merging them.

Notes:

- Updates: Bashir has now moved from the Google Cloud team to Google Health. This opens an opportunity for him to continue contributing to OMRS for a few more quarters. OMRS has been given $5k in AWS credits; however, Google Cloud could have ~$20k available if we need it. The question is not "what to do with those credits" but "what do we need".

- E2E test progress: 1 component to each that hasn't been merged. The first was a test for being able to spin up a container - done.

- The last component to E2E should eventually support multiple modes (e.g., Parquet vs FHIR store, batch vs streaming); we can add them gradually.

- Large data set: Ian working on simulator for this (takes time to work w/ realistic data!)

- 2021 Q1 Goals

- Main Goal (Highest Priority): To continuously be running beside the current indicator calculation AMPATH is using, and to show they generate the same indicators. Next steps: Implementing those 10 reference indicators.

- Other Goal: A second implementer - esp. one with multiple sites who want to generate analytics by merging them.

- Who to reach out to: PIH, Bahmni (JSS), Jembi, ITECH (iSantePlus in every hospital in Haiti - Piotr has been involved in analytics work b/c they're looking to aggregate data into one store)

- Value Prop: If you have multiple deployments and find that managing indicators takes repeated headaches to maintain - this is for you. You can get your indicators with little maintenance, and it should work at scale. If you have many sites, many patients, many obs, this is fast, will enable interactive queries that don't impact your production system performance. The beta is early so your feedback and your org's needs will allow the squad to ensure what we're building is applicable to your use case.

- Key points from 2020 Retrospective: https://docs.google.com/presentation/d/1f0VFO0eucWZYpIDOtm8smcCNWdW_7YjdhBWS7bdfTJ8/edit#slide=id.gb1d91779dd_0_733
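For the main Q1 goal of running beside AMPATH's current indicator calculation and showing both produce the same indicators, a run could end with a reconciliation check like this sketch. The dictionary inputs, the tolerance parameter, and the function name are assumptions.

```python
def compare_indicators(ours: dict[str, float], reference: dict[str, float],
                       tolerance: float = 0.0) -> dict[str, tuple]:
    """Return indicators whose values differ, or that exist on only one side."""
    mismatches = {}
    for name in sorted(set(ours) | set(reference)):
        a, b = ours.get(name), reference.get(name)
        if a is None or b is None or abs(a - b) > tolerance:
            mismatches[name] = (a, b)
    return mismatches
```

An empty result means the two systems agree on every indicator for that run.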